RPA Trial Experience Heuristic Evaluation

Context

In 2020, IBM acquired WDG Automation, gaining robotic process automation (RPA) technology to strengthen its enterprise automation services during the COVID-19 pandemic. One of the first initiatives was to launch an IBM RPA Trial Experience that showcased the product's capabilities to prospective customers.

To ensure that the trial would make a strong first impression on prospective customers, I performed a heuristic evaluation of the experience.

*Heuristic evaluations are intended to be as objective as possible: they apply established design principles, conventions, and industry best practices, most of which describe human behavior and hold across interfaces, domains, and contexts.

The objective of the heuristic evaluation was to identify and reduce potential issues in a very short amount of time, before users encountered them.

Participants: 1 User Researcher

Timeframe: 2 days

Heuristic Evaluation

An internal IBM heuristic evaluation guide was used. This guide focused on aspects of SaaS software such as meeting system requirements, navigation and discovery.

Issues found were rated on severity. The issues were either:

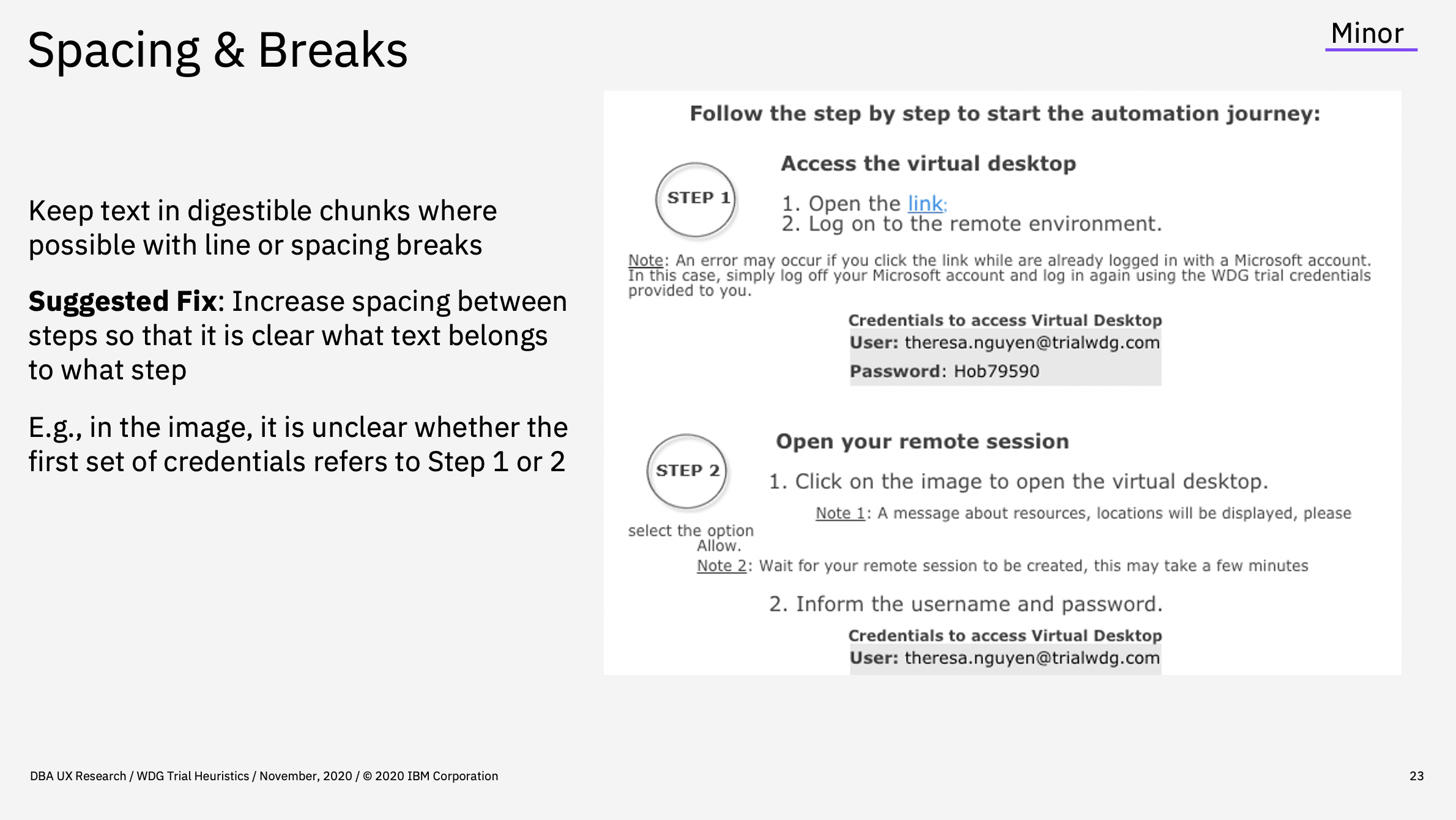

Minor: The issue is present in a single case and does not significantly hinder the user's ability to perform or complete an action or task,

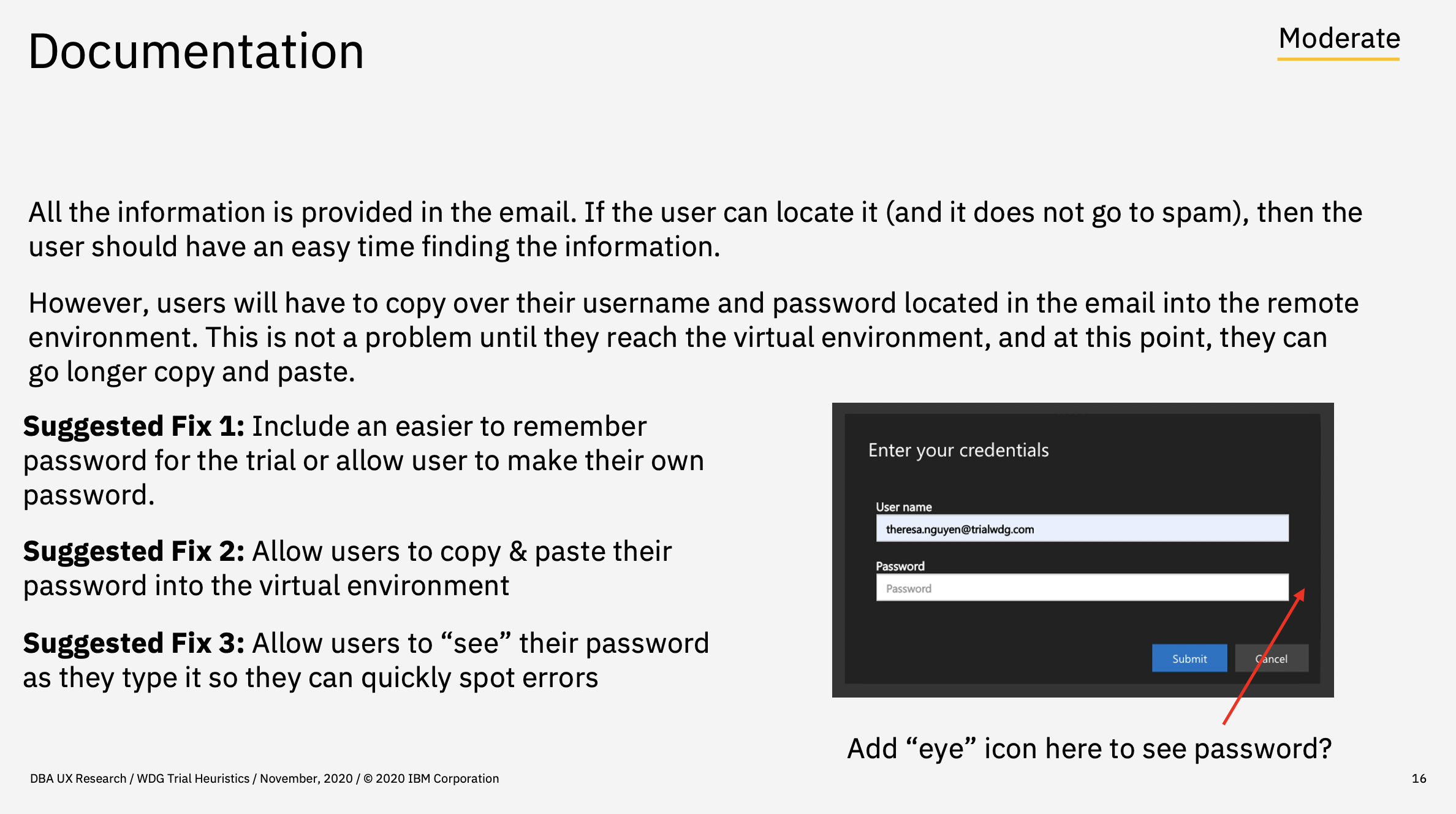

Moderate: The issue is present in two or more cases and does significantly hinder the user's ability to complete the task, or

Critical: The issue is present globally or throughout the documentation and makes a task impossible or highly difficult to complete without further support
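The three definitions above can be read as a small lookup on two dimensions: how widespread the issue is and how much it hinders the task. The following is only an illustrative sketch of that reading, not the internal IBM guide itself. The names `Scope`, `Impact`, `RUBRIC`, and `rate_severity` are hypothetical, and combinations that the definitions do not cover return `None` to signal that the researcher's judgment is needed.

```python
from enum import Enum
from typing import Optional


class Scope(Enum):
    """How widespread the issue is (hypothetical names)."""
    SINGLE = "present in a single case"
    MULTIPLE = "present in two or more cases"
    GLOBAL = "present globally or throughout the documentation"


class Impact(Enum):
    """How much the issue hinders the user (hypothetical names)."""
    LOW = "does not significantly hinder the task"
    SIGNIFICANT = "significantly hinders the task"
    BLOCKING = "task impossible or highly difficult without support"


# Only the three combinations spelled out in the definitions are encoded.
RUBRIC = {
    (Scope.SINGLE, Impact.LOW): "Minor",
    (Scope.MULTIPLE, Impact.SIGNIFICANT): "Moderate",
    (Scope.GLOBAL, Impact.BLOCKING): "Critical",
}


def rate_severity(scope: Scope, impact: Impact) -> Optional[str]:
    """Return the severity label, or None when the definitions do not
    cover the combination and a judgment call is required."""
    return RUBRIC.get((scope, impact))


if __name__ == "__main__":
    print(rate_severity(Scope.MULTIPLE, Impact.SIGNIFICANT))
    print(rate_severity(Scope.SINGLE, Impact.BLOCKING))
```

Writing the rubric this way makes its gaps visible: an issue that appears in a single case but blocks the task, for example, matches none of the three definitions and has to be rated by hand.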

For heuristics that did not meet IBM standards, a “suggested fix” was recommended.

Overarching issues were found with logging into the trial experience:

Users are constantly navigating between the email and the virtual environment

The credentials for the log-in are not displayed in a logical manner

The visuals on the page look outdated and need to be updated to reflect IBM branding

The researcher then rated each issue on the severity scale.

The ratings were based on how much the issue would affect the user experience and how feasible the fix was.

Readout

A readout was then delivered to the team that explained the issues and suggested fixes in detail.

After the suggested fixes were recommended to the marketing team, they were logged as issues on GitHub to ensure that they would be implemented. An updated version of the trial was then released with most of the recommendations implemented; the more intensive changes were planned for the following months.